Sep 24, 2024

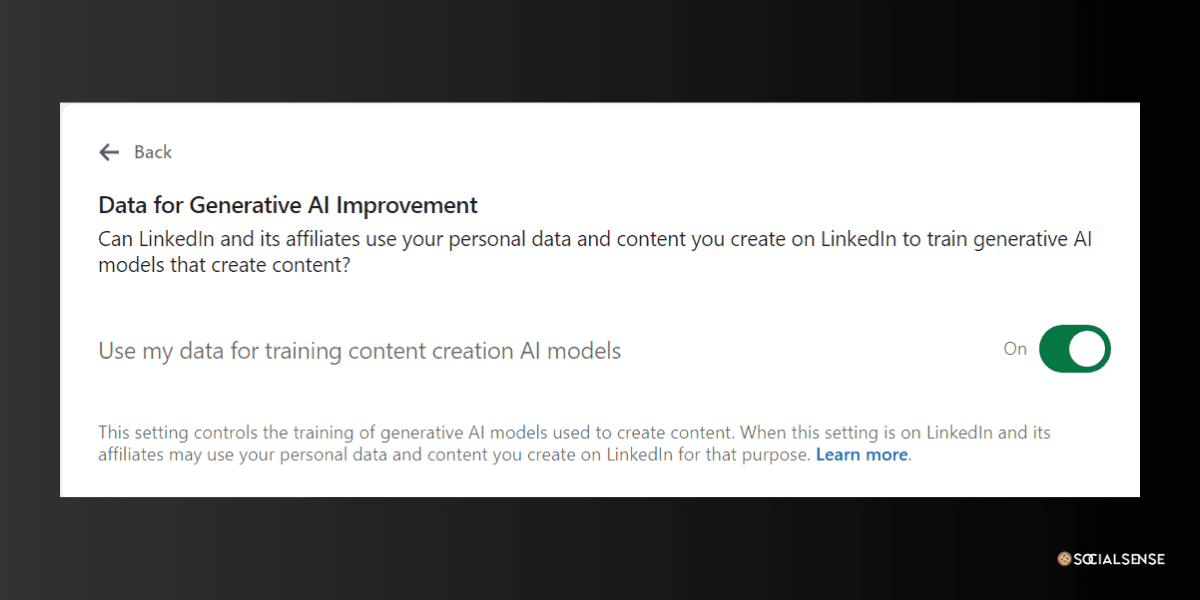

At a time when data privacy is under close scrutiny, LinkedIn has found itself at the center of a significant controversy. The platform stirred outrage among its UK users with a sweeping change to its AI training policy: personal data would be used to train AI models automatically unless users opted out. The resulting backlash prompted LinkedIn to rethink its approach.

The Controversial Policy Change

LinkedIn’s initial move aimed to strengthen its AI capabilities by using member data to deliver better recommendations and more personalized experiences. However, the switch from an opt-in to an opt-out model raised serious concerns about user consent and data privacy. Under the new policy, user data, including personal, interaction, and usage data, would be used for AI training automatically unless users took action to opt out. Many users found this intrusive, and the change drew sharp criticism from users and digital rights advocates alike.

Why It Mattered

In an era when users are increasingly cautious about their data, the idea that LinkedIn would use personal information without explicit consent caused alarm. Social media was flooded with protests, and digital rights groups swiftly condemned the policy as a violation of privacy rights. The UK’s Information Commissioner’s Office (ICO) also expressed concern and initiated an investigation into the matter, underlining the legal questions raised by such a policy shift.

The Backlash

The public outcry against LinkedIn's AI training policy was rapid and widespread. Users took to platforms like Twitter to voice their frustrations, with many highlighting the need for control over their own data. Campaigns launched by digital rights organizations further amplified these concerns, arguing for the importance of informed consent in data usage.

User Reactions

Social media served as a battleground for users expressing their discontent:

“LinkedIn’s AI training opt-out policy is a huge violation of user privacy! We should have control over our own data. #LinkedInPrivacy”

“I’m not okay with LinkedIn using my data for AI training without my consent. This opt-out model is a sneaky way to bypass privacy concerns. #DataProtection”

Regulatory Response

The ICO’s involvement underscored the seriousness of the situation. The regulator announced an investigation to assess whether LinkedIn’s new policy complied with data protection law, adding significant regulatory pressure to the public backlash.

LinkedIn's Reversal

Facing mounting pressure, LinkedIn ultimately reversed its decision and returned to an opt-in model for AI training. Users and advocates welcomed the move, which underlined how much user privacy matters in the tech industry. In a statement, Blake Lawit, SVP and general counsel at LinkedIn, emphasized the company’s commitment to listening to its users and safeguarding their privacy.

What It Means for Users

Under the revised policy, LinkedIn users can choose whether their data is used for AI training. The updated privacy policy is intended to make this clear and to ensure that user consent is obtained before any data is used for this purpose.

Broader Implications

LinkedIn’s policy reversal has significant implications not only for its users but also for the tech industry at large. It serves as a reminder of the delicate balance between innovation in AI and the necessity of protecting user data.

Impact on AI Development

While the move towards an opt-in model enhances user privacy, it may also limit the data available for training AI models. This raises important questions about how tech companies can innovate while still respecting user privacy and complying with regulations.

Industry Trends

The controversy is part of a larger trend in the tech world, where companies face increasing scrutiny over their data practices. Many organizations are now exploring "privacy by design" principles, which build data protection into systems, including AI technologies, from the design stage rather than adding it afterward.

Future of AI and Data Privacy

The LinkedIn incident highlights a critical juncture in the relationship between AI technology and data privacy regulation. As regulators such as the ICO continue to focus on data protection, companies will need to prioritize user consent and transparency in their practices.

The Path Forward

The need for ethical AI development that respects individual rights is becoming increasingly clear. As public awareness of data privacy issues grows, companies that adopt transparent practices will likely gain a competitive edge in the marketplace.

The reversal of LinkedIn’s AI training policy is a victory for user privacy and a testament to the power of public pressure and regulatory oversight. It underscores the importance of user consent and transparency in the tech industry, as well as the necessity for companies to navigate the evolving landscape of data privacy regulations.

To stay informed and engaged on this important issue, consider the following:

Review Privacy Policies: Understand how your data is being used on the platforms you frequent.

Make Informed Choices: Actively opt in or out of data-sharing practices based on your comfort level.

Advocate for Change: Use your voice to support stronger data privacy protections.

By remaining proactive, you can help shape the future of data privacy and ensure that your rights are respected in an increasingly digital world.

Join the conversation—share your thoughts on LinkedIn’s policy reversal and the broader implications for data privacy in the tech industry!

Sep 24, 2024

LinkedIn’s AI Training Policy Reversal in the UK: Key Changes and What It Means for Users

Fatema Patel
